Generating Custom Puzzles

This is made possible by cutting edge “deep learning” AI technology, specifically a system known as Stable Diffusion.

While you can often get very good results with very little effort, there are some useful tricks for writing successful prompts. (“prompt” is another word for the written description used to generate the image.) On this page you’ll learn some of the basics of generating great images, and you’ll find a bunch of examples as well as a list of resources where you can learn a lot more.

Prompt Writing Basics

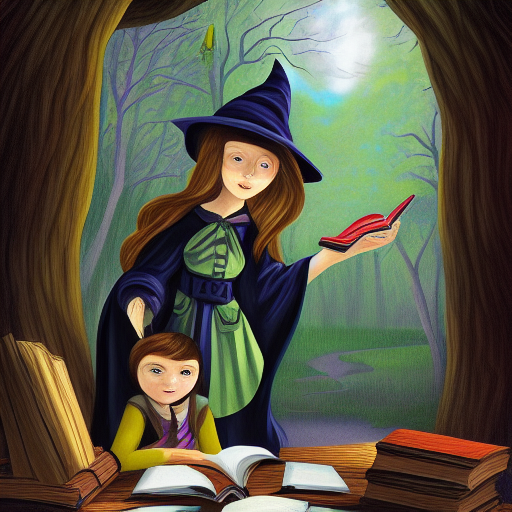

At its simplest, to write a prompt, all you need to do is state the subject of your image. For example, here’s an image generated from the prompt “A magical wizard school library with a young witch studying”:

A magical wizard school library with a young witch studying (seed 2845286636)

Note that the prompt included the setting (a magical wizard school library), the subject (a young witch) and an action (studying). Your prompts don’t need to include all of these; the AI will fill in whatever’s missing from its own ‘imagination’.

The AI cares about the order of the words you give it — put the elements you want to emphasize most toward the beginning of your prompt. In this case, by mentioning the library before the witch, the setting is emphasized.

If you include an action, keep it simple; the AI quickly gets confused by complicated actions. Likewise with your subject, the AI does much better when there is a single subject rather than multiple subjects.

So we’ve successfully generated an image of a young witch studying. But, it might not be in the style we want. The AI just chooses a style at random from the most common styles for this type of subject, unless we specify what style we’d like. How do we do that? The easiest way is by naming an artist (or more than one artist) whose styles we want the AI to draw inspiration from.

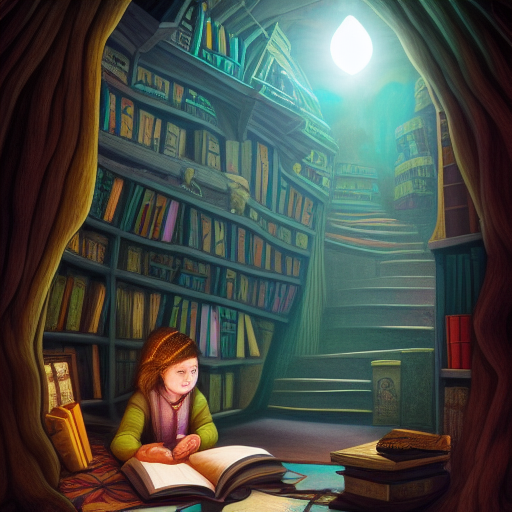

In the following example, I’ve used the same prompt but added “by Mary GrandPré, by Levi Pinfold” at the end. These are artists known for their work on the Harry Potter book series.

A magical wizard school library with a young witch studying, by Mary GrandPré, by Levi Pinfold (seed 2845286636)

By specifying the artists, the AI has created a similar image but incorporating some elements from those art styles. The characters are older, and the illustration style appears to be aimed at an older reader.

Another way to further specify the kind of image we want is to add modifiers. There are all kinds of possible modifiers; feel free to experiment with your own. In the following example, I’ve added the modifiers “vibrant, mysterious, intricate, highly detailed”:

A magical wizard school library with a young witch studying, by Mary GrandPré, by Levi Pinfold, vibrant, mysterious, intricate, highly detailed (seed 2845286636)

Again the resulting image is similar in many ways, but there’s a good deal more detail and a wider variety of colors.

Now I’ll add one more modifer, “cinematic lighthing”:

A magical wizard school library with a young witch studying, by Mary GrandPré, by Levi Pinfold, vibrant, mysterious, intricate, highly detailed, cinematic lighting (seed 2845286636)

This modifier changed the composition of the image dramatically, placing the subject in a deep, mysterious downstairs room. There’s nothing special about this particular modifier; any modifier might have a minor impact on some images, and cause more dramatic changes in others.

Some Artists and Modifiers

Here are some examples of artists and modifiers that you might try using in your own prompts. There’s no limit though — feel free to experiment with using the names of artists you like, or any modifiers that could describe the kinds of images you want to create.

Example Artists

Fantasy Landscape

- Tyler Edlin

- Mark Simonetti

Fantasy Characters

- Justin Gerard

- Wayne Barlowe

- Victor Adame Minguez

- Jesper Ejsing

- Gerald Brom

- Greg Rutkowski

- Frank Frazetta

Classics

- Da Vinci

- Pablo Picasso

- Van Gogh

- Winslow Homer

- M.C. Escher

- Alphonse Mucha

Sci-Fi

- Jim Burns

- John Harris

- Dean Ellis

- H.R. Giger

Anime

- Studio Ghibli

- Makoto Shinka

Example Modifiers

- 4k resolution

- 8k resolution

- Unsplash photo contest winner

- Trending on artstation

- Deviantart

- #pixelart

- 3d art

- Digital painting

- Blender

- Octane Render

- Unreal engine

- Watercolor

- Oil painting

- Acrylic painting

- Shot on film

- 35mm lens

- Portrait photography

- Portrait

- Character design

- Concept art

- Vibrant

- Textured

- Haze

- Highly detailed

- Intricate

- Cinematic lighting

- Dystopian feel

- Vaporwave

- Birds eye view

- Fisheye lens

- Drone photo

- Mixed media

Weaknesses

AIs that can take descriptions and turn them into images didn’t even exist a couple years ago, so the technology is brand new and advancing quickly. This means that it’s still got some glaring weaknesses in these early days.

Multiple Subjects

Try to keep the subjects and concepts that you’re asking it to portray fairly simple. If you ask for more than one subject, it’s likely to meld together features of those subjects into a mish-mash. For example, this image was made from the prompt “The Avengers”:

The Avengers (seed 1541803819)

I’m not worried about copyright infringement by posting this here, because none of these look anything like Marvel’s characters. Instead each one is a random combination of features from various Avengers.

Hands, Limbs, Faces

Often the AI will generate images of hands, limbs, and faces that are deformed, missing parts, or have extra fingers and appendages. This is especially the case if it’s a busy image with a lot of things going on other than those features.

Here’s an image generated from the prompt “A warrior sorceress casting a spell.” As you can see the face is somewhat iffy, and while there appears to be no left arm there are three hand-like things.

A warrior sorceress casting a spell (seed 1213007166)

Often the only solution for this is to generate another image using the same prompt. Eventually, it usually can get all the features roughly correct.

Seeds

Stable Diffusion creates an image by starting with “white noise” – a picture filled with nothing but random light and dark pixels. It then gradually adjusts each pixel, so that the image more closely resembles what the prompt describes. It does this repeatedly until it has a finished image.

Because it works this way, it’s not just the prompt that determines what the resulting image will look like; the specific arrangement of random pixels that it starts with also has a big influence. That random arrangement of starting pixels is generated from a single number, called a seed.

A seed is a number that can range from 0 to 4,294,967,295. If you generate an image twice using both the same prompt and the same seed, you’ll get exactly the same image each time. But if you change just the seed, or any part of the prompt, the image will be different. This means that for any prompt you can think up, there are over 4 billion different images that Stable Diffusion can make from it, one for each possible seed value! And given that there’s virtually no limit to the number of possible prompts, it’s easy to see that the number of different images that can be created is basically infinite.

Every time you generate a puzzle image in Puzzle Together, a seed value is chosen randomly. That way you’ll get a different image each time you generate, even for the same prompt. But, what if you like an image but want to just refine it a little bit by changing elements of the prompt? You can do that by specifying the seed value. This way you can re-use the same seed as often as you’d like, rather than having Puzzle Together come up with new seeds each time.

To specify the seed value when you generate a puzzle image, just include “seed:” followed by the seed value, in your prompt. For example:

hd photo of fruit seed:92734991

So, how do you find out the seed value for a puzzle image that you’ve generated in Puzzle Together? Once you’ve generated the custom puzzle, press its Play button. On the next screen where you select the puzzle settings, note that there’s a down-arrow icon in the lower right corner of the puzzle image. Press this button to download the image to your computer. Then look at the image’s filename; it will look something like this:

puzzletogether_5228_3597122867_A-goat-in-a-tuxedo–portrait-by-Renoir.jpg

The second number in the filename, in this case 3597122867, is the seed used to generate the image. So now if you went back into Puzzle Together and entered an image description like this:

A goat in a tuxedo, portrait by Renoir seed:3597122867

…then you should generate the exact same image again. You can now make changes to the prompt to refine the image to your liking, without having to start from scratch each time.

Here is an example of a basic prompt that I started with, and then a couple of variations I made by re-using the same seed and trying different changes to the prompt:

Magical faerie village deep in the forbidden forest. (seed 4066879483)

Magical faerie village deep in the forbidden forest, intricate, highly detailed, 8k, textured, art by artgerm and greg rutkowski and alphonse mucha (seed 4066879483)

Magical faerie village deep in the forbidden forest, Artstation, Octane render, Oil Paint, Supplementary-Colors, Full-HD, Rim Lights, insanely detailed and intricate, hypermaximalist, elegant, ornate, hyper realistic, super detailed (seed 4066879483)

Ownership

Images you generate within Puzzle Together are in the public domain (Specifically CC0 1.0 Universal Public Domain Dedication). You are free to download them, modify them, publish them, or do whatever you’d like with them.

Useful Links

There are many resources online full of ideas for getting the most out of your Stable Diffusion prompts. Here are a few:

Strikingloo’s Prompt Examples and Experiments

A Traveller’s Guide to Latent Space

Stable Diffusion Akashic Record

Examples

Here are a bunch of examples of nice images, along with the prompts used. These are just some ideas to spark the imagination; the possibilities are truly limitless. The seed values are also provided in case you want to generate the exact image, or create variations by refining the prompt.

a beautiful and stunning digital render of a humongous diamond cave, vines, haze, waterfall, volumetric lighting, hyperrealistic, green, blue sky, sunset, unreal engine 5, ultra detail, trending on artstation (seed 416518685)

Modern Mediterranean luxury house interior, modern, realistic, cinematic lighting, finely detailed features, perfect art, trending on artstation, painted by greg rutkowski, makoto shinkai, takashi takeuchi, akihiko yoshida. (seed 421573114)

Modern Mediterranean luxury house interior, modern, realistic, cinematic lighting, finely detailed features, perfect art, trending on artstation, painted by greg rutkowski, makoto shinkai, takashi takeuchi, akihiko yoshida. (seed 3524178185)

Mini worlds of the little people, faery folk, animals, simple lives, mixed media, highly detailed, charming, art style of beatrix potter and asher brown durand and jack davis (seed 821987241)

Mini worlds of the little people, faery folk, animals, simple lives, mixed media, highly detailed, charming, art style of beatrix potter and asher brown durand and jack davis (seed 1887390308)

Mini worlds of the little people, faery folk, animals, simple lives, mixed media, highly detailed, charming, art style of beatrix potter and asher brown durand and jack davis (seed 2662018045)

hd photo of fruit (seed 832847729)

female wizard by Alphonse Mucha, fantasy, magical, vibrant (seed 931092543)

rainy night in a cyberpunk city with glowing neon lights, birds eye view, fisheye lense, dramatic clouds, dystopian feel, digital painting, in the style of digital painting, studio ghibli, matte painting, unreal engine, game concept art (seed 869217125)

rainy night in a cyberpunk city with glowing neon lights, birds eye view, fisheye lense, dramatic clouds, dystopian feel, digital painting, in the style of digital painting, studio ghibli, matte painting, unreal engine, game concept art (seed 997108730)

A beautiful rococo painting of a Persian woman covered in peacock feathers standing before a red mosaic wall. , Artstation, Octane render, Oil Paint, Supplementary-Colors, Full-HD, Rim Lights, insanely detailed and intricate, hypermaximalist, elegant, ornate, hyper realistic, super detailed (seed 1305033464)

steampunk workshop (seed 1516845459)

britney spears as a warrior queen, intricate, highly detailed, 8k, textured, art by Frank Frazetta and greg rutkowski (seed 1587512074)

britney spears undead zombie, intricate, highly detailed, 8k, textured, art by Frank Frazetta and greg rutkowski (seed 4283569538)

A lone police officer overlooking a ledge towards the city below in Ghostpunk New York City | highly detailed | very intricate | cinematic lighting | by Asher Brown Durand and Eddie Mendoza | featured on ArtStation (seed 1750402234)

A lone police officer overlooking a ledge towards the city below in Ghostpunk New York City | highly detailed | very intricate | cinematic lighting | by Asher Brown Durand and Eddie Mendoza | featured on ArtStation (seed 2516874501)

realistic beautiful woman in a stylish bohemian dress, many accessories, in a stunning outdoor landscape, detailed, intricate, watercolor, mixed media, vibrant palette, art style by Tamara de Lempicka and John Singer Sargent, and Elizabeth Murray, pastel palette (seed 2719032760)

dramatic image of a vampire in front of the moon, cinematic lighting, realistic anime, gothic architecture, spooky atmosphere, steampunk, clouds, airship, art style by Ashi Productions and Studio Ghibli (seed 1968798196)

Warrior sorceress casting a fireball spell, intricate, highly detailed, 8k, textured, art by Alphonse Mucha, Gustav Klimt (seed 2929347455)

Warrior sorceress casting a fireball spell, intricate, highly detailed, 8k, textured, art by Frank Frazetta and greg rutkowski (seed 2929347455)

hd photo of desserts (seed 3123222892)

hd photo of desserts (seed 3586284766)

hd photo of desserts (seed 2664707392)

hd drone photo of a hedge maze (seed 3183957293)

the vast lonely abyss (seed 3208025507)

white Pegasus a mythical winged divine horse flying (seed 3615119831)

Steve Buscemi as Super Mario

hd photo of Halloween haunted house (seed 1329019797)

hd photo of Halloween haunted house (seed 3971880333)